Innsbruck Pointing at Objects (IPO) Dataset

Deictic gestures, i.e. pointing at things, are a pervasive form of non-verbal communication in human-human collaborative tasks, used for example to direct attention towards objects of interest. In a human-robot interaction scenario, delegating tasks from a human to a robot requires recognizing the pointing gesture and estimating its pose.

Dataset Features

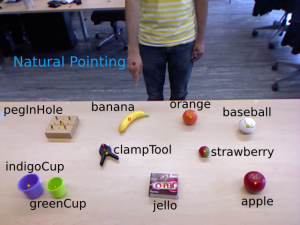

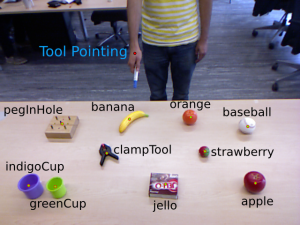

- Two types of pointing gestures: (1) natural pointing with the index finger, and (2) tool pointing with a whiteboard marker.

- 9 participants pointing at 10 objects, each using both types of pointing gestures.

- Pointing gestures recorded as RGB-D images with a Kinect sensor.

- 180 RGB-D test images available with ground truth for evaluating the 3D pointing direction.

- Publicly available for download (~100 MB).
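
This page does not say which metric is used to evaluate the 3D pointing direction. One common choice, assumed here purely for illustration, is the angle between an estimated direction and the ground-truth direction. The function name `angular_error_deg` is hypothetical and not part of the dataset.

```python
import math


def angular_error_deg(estimated, ground_truth):
    """Return the angle in degrees between two 3D direction vectors.

    Assumption: both inputs are (x, y, z) tuples in the same coordinate
    frame. They do not need to be unit length.
    """
    dot = sum(e * g for e, g in zip(estimated, ground_truth))
    norm_e = math.sqrt(sum(e * e for e in estimated))
    norm_g = math.sqrt(sum(g * g for g in ground_truth))
    if norm_e == 0.0 or norm_g == 0.0:
        raise ValueError("direction vectors must be non-zero")
    # Clamp to [-1, 1] so rounding errors cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, dot / (norm_e * norm_g)))
    return math.degrees(math.acos(cos_angle))
```

Because the vectors are normalized inside the function, only their directions matter, so an estimate can be compared against the ground truth regardless of how either vector is scaled.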

Sample Images

The marked points (red: hand, green: objects) are the 2D locations used as ground truth.
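
As a rough sketch of how 2D ground-truth locations could be turned into a 3D pointing direction, the following back-projects the hand and object pixels with a pinhole camera model and takes the unit vector from hand to object. Everything specific here is an assumption: the intrinsics are nominal placeholder values often used for a 640x480 Kinect image, depth is assumed to be in metres, and the names `back_project` and `pointing_direction` are hypothetical. The dataset's own calibration and file format should be used instead where available.

```python
import math

# Assumed placeholder intrinsics (pixels); not taken from the dataset.
FX, FY = 525.0, 525.0
CX, CY = 319.5, 239.5


def back_project(u, v, depth_m, fx=FX, fy=FY, cx=CX, cy=CY):
    """Map pixel (u, v) with depth in metres to a 3D point in the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def pointing_direction(hand_px, hand_depth_m, object_px, object_depth_m):
    """Return the unit vector from the 3D hand point to the 3D object point."""
    hand = back_project(hand_px[0], hand_px[1], hand_depth_m)
    obj = back_project(object_px[0], object_px[1], object_depth_m)
    diff = tuple(o - h for o, h in zip(obj, hand))
    length = math.sqrt(sum(d * d for d in diff))
    if length == 0.0:
        raise ValueError("hand and object map to the same 3D point")
    return tuple(d / length for d in diff)
```

For example, `pointing_direction((300, 250), 1.2, (400, 260), 2.0)` would give the direction for a hand at 1.2 m pointing at an object at 2.0 m, with the pixel coordinates and depths here being made-up values.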

Reference

Dadhichi Shukla, Ozgur Erkent, Justus Piater. Probabilistic detection of pointing directions for human robot interaction. International Conference on Digital Image Computing: Techniques and Applications, 2015.

BibTeX

@InProceedings{Shukla-2015-DICTA,
  title = {{Probabilistic detection of pointing directions for human robot interaction}},
  author = {Shukla, Dadhichi and Erkent, Ozgur and Piater, Justus},
  booktitle = {{International Conference on Digital Image Computing: Techniques and Applications}},
  year = 2015,
  month = 11,
  publisher = {IEEE},
  doi = {10.1109/DICTA.2015.7371296},
  url = {https://iis.uibk.ac.at/public/papers/Shukla-2015-DICTA.pdf}
}

Acknowledgement

This research has received funding from the European Community’s Seventh Framework Programme FP7/2007-2013 (Specific Programme Cooperation, Theme 3, Information and Communication Technologies) under grant agreement no. 610878, 3rd HAND.

Contact

dadhichi[dot]shukla[at]uibk[dot]ac[dot]at