Innsbruck Multi-View Hand Gesture (IMHG) Dataset

Hand gestures are a natural form of communication in human-robot interaction scenarios and can be used to delegate tasks from a human to a robot. A major requirement for advancing towards human-like interaction with robots is a hand gesture dataset on which the performance of gesture recognition algorithms can be evaluated.

Dataset Features

- 22 participants performed 8 hand gestures in the context of human-robot interaction scenarios taking place at close proximity.

- 8 hand gestures categorized as:
  - 2 types of deictic gestures with the ground truth location of the target pointed at,
  - 2 symbolic gestures,
  - 2 manipulative gestures,
  - 2 interactional gestures.

- A corpus of 836 test scenarios (704 reference gestures with ground truth, and 132 other gestures).

- Hand gestures recorded from two views (frontal and lateral) using an RGB-D Kinect sensor.

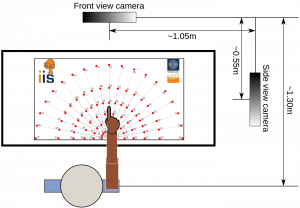

- The data acquisition setup can easily be recreated using a polar coordinate pattern, as shown in the figure below, so that new hand gestures can be added in the future.

- Soon to be released publicly.

- Currently available for download (~888 MB) with authentication.

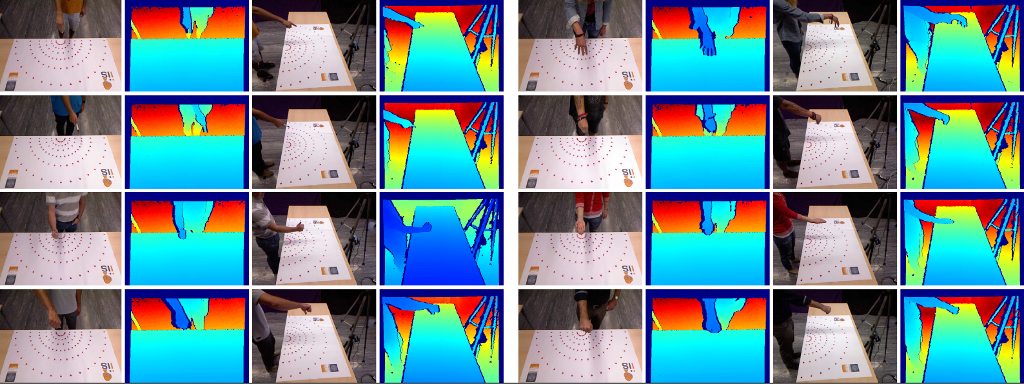
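The polar coordinate pattern also offers a simple way to express the ground truth location of a deictic target. The sketch below assumes, hypothetically, that each target is given as a radius in centimetres and an angle in degrees on the pattern; the names `PolarTarget`, `to_cartesian` and `pointing_error_cm` are illustrative and not part of the dataset release. It converts a target to Cartesian coordinates in the pattern plane and measures how far an estimated pointed-at location lies from it.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PolarTarget:
    """Hypothetical target location on the polar coordinate pattern.

    Assumptions (not taken from the dataset files):
    radius_cm: distance from the pattern origin, in centimetres.
    angle_deg: angle from the pattern's 0-degree axis, counter-clockwise, in degrees.
    """
    radius_cm: float
    angle_deg: float


def to_cartesian(target: PolarTarget) -> tuple[float, float]:
    """Convert a polar pattern location to (x, y) in the pattern plane."""
    theta = math.radians(target.angle_deg)
    return (target.radius_cm * math.cos(theta), target.radius_cm * math.sin(theta))


def pointing_error_cm(estimated_xy: tuple[float, float], target: PolarTarget) -> float:
    """Euclidean distance between an estimated pointed-at location and the ground truth."""
    gx, gy = to_cartesian(target)
    ex, ey = estimated_xy
    return math.hypot(ex - gx, ey - gy)


if __name__ == "__main__":
    target = PolarTarget(radius_cm=30.0, angle_deg=90.0)
    print(to_cartesian(target))                   # approximately (0.0, 30.0)
    print(pointing_error_cm((3.0, 34.0), target))  # approximately 5.0
```

For example, a target at 30 cm and 90 degrees maps to roughly (0, 30), so an estimate at (3, 34) lies about 5 cm away from it. The actual units and angle convention should be checked against the released data.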

Sample Scenarios

Gestures recorded from the frontal and side views. Top to bottom: Finger pointing, Tool pointing, Thumb up (approve), Thumb down (disapprove), Grasp open, Grasp close, Receive, Fist (stop).
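The eight gestures and their categories can be kept in a small lookup table, for instance to label predictions or to select the deictic samples that carry a ground truth target. This is a sketch under the assumption that the caption lists two consecutive gestures per category, in the same order as the feature list (deictic, symbolic, manipulative, interactional); `Category`, `GESTURES` and the helper functions are hypothetical names, not part of the dataset.

```python
from enum import Enum


class Category(Enum):
    DEICTIC = "deictic"
    SYMBOLIC = "symbolic"
    MANIPULATIVE = "manipulative"
    INTERACTIONAL = "interactional"


# Gesture names from the caption, paired with categories under the assumption
# that each category contributes two consecutive gestures in list order.
GESTURES: dict[str, Category] = {
    "Finger pointing": Category.DEICTIC,
    "Tool pointing": Category.DEICTIC,
    "Thumb up (approve)": Category.SYMBOLIC,
    "Thumb down (disapprove)": Category.SYMBOLIC,
    "Grasp open": Category.MANIPULATIVE,
    "Grasp close": Category.MANIPULATIVE,
    "Receive": Category.INTERACTIONAL,
    "Fist (stop)": Category.INTERACTIONAL,
}

# Corpus size as stated in the feature list.
REFERENCE_SCENARIOS = 704  # reference gestures with ground truth
OTHER_SCENARIOS = 132      # other gestures
TOTAL_SCENARIOS = REFERENCE_SCENARIOS + OTHER_SCENARIOS  # 836 test scenarios


def gestures_in(category: Category) -> list[str]:
    """Return the gesture names belonging to one category, in caption order."""
    return [name for name, cat in GESTURES.items() if cat is category]


def has_ground_truth_target(gesture: str) -> bool:
    """Only deictic gestures come with a ground truth pointed-at location."""
    return GESTURES[gesture] is Category.DEICTIC
```

Keeping the category as an `Enum` rather than a free-form string makes typos in category names fail loudly instead of silently producing empty selections.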

Reference

Dadhichi Shukla, Özgür Erkent, Justus Piater. A Multi-View Hand Gesture RGB-D Dataset for Human-Robot Interaction Scenarios. International Symposium on Robot and Human Interactive Communication, 2016.

BibTeX

@InProceedings{Shukla-2016-ROMAN,
  title = {{A Multi-View Hand Gesture RGB-D Dataset for Human-Robot Interaction Scenarios}},
  author = {Shukla, Dadhichi and Erkent, Ozgur and Piater, Justus},
  booktitle = {{International Symposium on Robot and Human Interactive Communication}},
  year = 2016,
  month = 8,
  publisher = {IEEE},
  doi = {10.1109/ROMAN.2016.7745243},
  note = {New York, USA},
  url = {https://iis.uibk.ac.at/public/papers/Shukla-2016-ROMAN.pdf}
}

Acknowledgement

This research has received funding from the European Community’s Seventh Framework Programme FP7/2007-2013 (Specific Programme Cooperation, Theme 3, Information and Communication Technologies) under grant agreement no. 610878, 3rd HAND.

Contact

dadhichi[dot]shukla[at]uibk[dot]ac[dot]at