
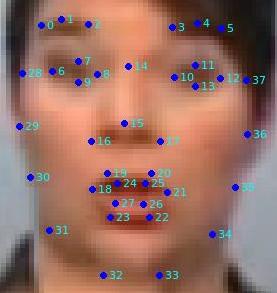

Face shape annotations for the RWTH-PHOENIX corpus

You can download our face shape annotations here, along with the corresponding images, for the seven signers who appear in the RWTH-PHOENIX corpus. The archive contains one directory per signer, each of which contains an “images” subdirectory and a “points” subdirectory. All image files are 300×260 (width × height) PNG images. All face shape annotation files are ASCII files with the filename extension “.pts”.
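The directory layout described above can be walked with a few lines of Python. This is a minimal sketch, not part of the distribution: the function name `list_annotated_pairs` is hypothetical, and it assumes that each “.pts” file and its image share the same base name (for example `frame.pts` and `frame.png`), which should be checked against the actual archive.

```python
from pathlib import Path


def list_annotated_pairs(root):
    """Return (signer, image_path, points_path) tuples found under root.

    Expects the layout root/<signer>/images and root/<signer>/points.
    """
    pairs = []
    for signer_dir in sorted(Path(root).iterdir()):
        images_dir = signer_dir / "images"
        points_dir = signer_dir / "points"
        # Skip anything that is not a signer directory with both subdirectories.
        if not (images_dir.is_dir() and points_dir.is_dir()):
            continue
        for pts_path in sorted(points_dir.glob("*.pts")):
            # Assumption: the image has the same base name as the annotation.
            image_path = images_dir / (pts_path.stem + ".png")
            if image_path.is_file():
                pairs.append((signer_dir.name, image_path, pts_path))
    return pairs
```

Annotations without a matching image are silently skipped here; raising an error instead may be preferable when validating a fresh download.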

Distribution of the annotated images among the signers

In total, 369 images from the RWTH-PHOENIX corpus (more precisely, from the RWTH-PHOENIX-v02-split01-TRAIN snapshot) have been manually annotated with the face shape.

| Signer | # annotated images |
|---|---|
| Bastienne Blatz | 61 |
| Christian Plugfelder | 53 |
| Karina Orlowski | 42 |
| Kira Knuehmann | 65 |
| Magdalena Meisen | 62 |
| Silke Lintz | 51 |
| Stephanie Prothmann | 35 |

Structure of a face shape annotation file (".pts" file)

For each image, the face shape annotation consists of the ordered list of the positions of 38 facial landmarks. Each facial landmark (or face shape point) has two coordinates in the axis system originating at the top-left corner of the image, with the x-axis pointing right and the y-axis pointing down. The structure of a “.pts” file is as follows:

version: 1

n_points: 38

{

x_1 y_1

x_2 y_2

...

x_38 y_38

}
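A file with this structure can be read with a small parser. The following is a minimal sketch under stated assumptions: the function name `parse_pts` is hypothetical, coordinates are assumed to be whitespace-separated decimal numbers, and blank lines are ignored.

```python
def parse_pts(text):
    """Parse the contents of a ".pts" file into a list of (x, y) floats."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]

    # Header lines ("version: 1", "n_points: 38") come before the opening brace.
    header = {}
    body_start = None
    for i, line in enumerate(lines):
        if line == "{":
            body_start = i + 1
            break
        key, _, value = line.partition(":")
        header[key.strip()] = value.strip()
    if body_start is None:
        raise ValueError("missing opening brace")

    try:
        body_end = lines.index("}", body_start)
    except ValueError:
        raise ValueError("missing closing brace") from None

    # Each body line holds one point: "x y".
    points = []
    for line in lines[body_start:body_end]:
        x, y = line.split()
        points.append((float(x), float(y)))

    # Check the point count against the n_points header field.
    expected = int(header["n_points"])
    if len(points) != expected:
        raise ValueError(f"expected {expected} points, found {len(points)}")
    return points
```

For a file on disk, the text can be obtained with `open(path, encoding="ascii").read()`. Points are returned in file order, so zero-based list indices can be used directly to address individual landmarks.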

A possible interpretation of the shape points as face parts is as follows (using zero-based indexing in the list of points of a “.pts” file):

| Shape point indices | Face part |
|---|---|
| 0-1-2 | upper part of the left eyebrow |
| 3-4-5 | upper part of the right eyebrow |
| 6-7-8-9 | eyelids of the left eye |
| 10-11-12-13 | eyelids of the right eye |
| 14-15 | nasal ridge |
| 16-17 | nasal base |
| 18-19-20-21-25-24 | upper lip |
| 18-23-22-21-26-27 | lower lip |
| 28-29-30-31-32-33-34-35-36-37 | cheeks and chin |
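The table above can be written down as a lookup structure, which makes it easy to pull out the points of one face part. This is only a sketch: the dictionary keys and the helper `part_points` are hypothetical names chosen for illustration, while the indices are copied from the table in the order given there.

```python
# Zero-based shape point indices for each face part, in the table's order.
FACE_PARTS = {
    "left_eyebrow_upper": [0, 1, 2],
    "right_eyebrow_upper": [3, 4, 5],
    "left_eye_eyelids": [6, 7, 8, 9],
    "right_eye_eyelids": [10, 11, 12, 13],
    "nasal_ridge": [14, 15],
    "nasal_base": [16, 17],
    "upper_lip": [18, 19, 20, 21, 25, 24],
    "lower_lip": [18, 23, 22, 21, 26, 27],
    "cheeks_and_chin": [28, 29, 30, 31, 32, 33, 34, 35, 36, 37],
}


def part_points(points, part):
    """Select the (x, y) points of one face part from a full 38-point shape."""
    return [points[i] for i in FACE_PARTS[part]]
```

Points 18 and 21 appear in both lip entries, since the upper and lower lip share them in the table. Together, the nine entries cover all 38 indices.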

N.B. The original images from the RWTH-PHOENIX corpus do not have square pixels and come in 210×260 resolution. The annotated data provided here should therefore be rescaled if the goal is to build models that apply to the entire corpus. Scaling both the images and the shapes by a factor of 210/300 in the horizontal direction only (x-coordinates) is sufficient.
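For the shape points, the horizontal rescaling amounts to multiplying every x-coordinate by 210/300 and leaving y unchanged. A minimal sketch, assuming the points are held as a list of (x, y) tuples; the names `SCALE_X` and `scale_points_x` are hypothetical:

```python
# Horizontal scale factor from the 300-pixel-wide annotated images
# to the 210-pixel-wide original corpus images.
SCALE_X = 210 / 300


def scale_points_x(points, scale_x=SCALE_X):
    """Scale only the x-coordinates of a list of (x, y) points."""
    return [(x * scale_x, y) for x, y in points]
```

The images need the matching resize from 300×260 to 210×260, which can be done with any image library.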