
Probabilistic Models of Appearance for Object Recognition and Pose Estimation

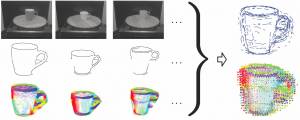

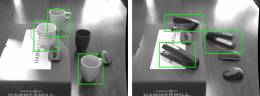

We developed probabilistic models to encode the appearance of objects, together with inference methods to perform detection (localization) and pose estimation of those objects in 2D images of cluttered scenes. Some of our early work used 3D, CAD-style models (Teney et al. 2011), but we then focused solely on appearance-based models (Teney et al. 2012). These are trained on 2D example images alone, the goal being to exploit as fully as possible the information conveyed by 2D images, without resorting to stereo or other 3D sensing techniques. Our models apply equally to specific object instances and to object categories/classes (Teney et al. 2013). The appearance is modeled as a probability distribution of low-level, fine-grained image features. The strength of the approach lies in its straightforward formulation, which is applicable to virtually any type of image feature. We have applied the method to different types of low-level features, namely points along image edges and intensity gradients extracted densely over the image.
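
To make "a distribution of low-level image features" concrete, here is a minimal NumPy sketch. It assumes edge points described by position and orientation (x, y, θ) and a kernel density estimate with a Gaussian kernel on position and an axial von Mises kernel on orientation; the class name, the bandwidths `sigma` and `kappa`, the synthetic square outline, and the brute-force search over translations are illustrative choices, not the exact formulation of the cited papers.

```python
import numpy as np


class EdgeAppearanceModel:
    """Kernel density estimate over oriented edge points (x, y, theta).

    Illustrative assumption: Gaussian kernel on position, axial von Mises
    kernel on orientation (edge orientations are defined modulo pi).
    """

    def __init__(self, points, sigma=2.0, kappa=4.0):
        self.points = np.asarray(points, dtype=float)  # (N, 3) training features
        self.sigma = sigma                              # position bandwidth (pixels)
        self.kappa = kappa                              # orientation concentration

    def density(self, queries):
        """Evaluate the density at each query feature; returns an (M,) array."""
        q = np.atleast_2d(np.asarray(queries, dtype=float))
        # Squared distances between every query position and every model position: (M, N)
        d2 = ((q[:, None, :2] - self.points[None, :, :2]) ** 2).sum(axis=-1)
        pos = np.exp(-d2 / (2 * self.sigma ** 2)) / (2 * np.pi * self.sigma ** 2)
        # Axial von Mises kernel on orientation, normalised over [0, pi)
        dtheta = q[:, None, 2] - self.points[None, :, 2]
        ang = np.exp(self.kappa * np.cos(2 * dtheta)) / (np.pi * np.i0(self.kappa))
        return (pos * ang).mean(axis=1)

    def score(self, observed, shift):
        """Mean log-likelihood of observed features after undoing a translation."""
        obs = np.array(observed, dtype=float)  # copy, so the caller's array is untouched
        obs[:, :2] -= shift
        return float(np.mean(np.log(self.density(obs) + 1e-300)))


def square_outline(size=10.0, step=1.0):
    """Hypothetical training data: oriented edge points along a square's border."""
    pts = []
    for s in np.arange(0.0, size, step):
        pts += [(s, 0.0, 0.0), (s, size, 0.0)]              # horizontal edges
        pts += [(0.0, s, np.pi / 2), (size, s, np.pi / 2)]  # vertical edges
    return np.array(pts)


# Detection as a search over translations of the model in a (synthetic) test image
model = EdgeAppearanceModel(square_outline())
observed = square_outline() + np.array([30.0, 20.0, 0.0])
candidates = [(dx, dy) for dx in range(0, 41, 5) for dy in range(0, 41, 5)]
best = max(candidates, key=lambda t: model.score(observed, np.array(t, dtype=float)))
print(best)  # (30, 20)
```

Nothing in the model is specific to edges: replacing the (x, y, θ) tuples and kernels with another feature type and its own kernel is what makes this kind of formulation applicable to other low-level features.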

Such appearance models have been applied to object detection/localization, object recognition, and pose classification (by matching the test view with one of several trained viewpoints of the object). A notable advantage of the proposed model is its ability to use dense gradients (extracted over entire images) directly, rather than relying on typical hand-crafted image descriptors. Using gradients extracted at a coarse scale over the images allows us to exploit shading and homogeneous regions to recognize untextured objects, where edges alone would be ambiguous.
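
The sketch below illustrates two ingredients of this paragraph under simplifying assumptions: gradients extracted densely at a coarse scale (here by block-averaging the image before taking finite differences), and pose classification by picking the trained viewpoint that best matches the test view. The matching score, a magnitude-weighted cosine of orientation differences, is a hypothetical stand-in for the probabilistic likelihood used in the actual models, and the synthetic "views" are smooth, untextured blobs whose only visible structure is shading.

```python
import numpy as np


def coarse_gradients(image, factor=4):
    """Dense gradients at a coarse scale: block-average the image, then differentiate.

    Returns (magnitude, orientation) arrays on the coarse grid.
    """
    h, w = image.shape
    h, w = h - h % factor, w - w % factor  # crop to a multiple of the block size
    coarse = image[:h, :w].reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))
    gy, gx = np.gradient(coarse)  # derivatives along rows (y) and columns (x)
    return np.hypot(gx, gy), np.arctan2(gy, gx)


def match_score(feats, template):
    """Hypothetical score: magnitude-weighted cosine of gradient-orientation differences."""
    (m1, a1), (m2, a2) = feats, template
    w = m1 * m2
    return float((w * np.cos(a1 - a2)).sum() / (w.sum() + 1e-12))


def classify_pose(image, view_templates):
    """Label of the trained viewpoint whose gradient field best matches the image."""
    feats = coarse_gradients(image)
    return max(view_templates, key=lambda label: match_score(feats, view_templates[label]))


def blob(angle_deg, size=64):
    """Synthetic untextured object: a smooth elongated blob seen at angle_deg."""
    y, x = np.mgrid[0:size, 0:size] - size / 2 + 0.5
    t = np.deg2rad(angle_deg)
    u = x * np.cos(t) + y * np.sin(t)
    v = -x * np.sin(t) + y * np.cos(t)
    return np.exp(-(u / 16) ** 2 - (v / 6) ** 2)


templates = {a: coarse_gradients(blob(a)) for a in (0, 45, 90, 135)}  # trained views
rng = np.random.default_rng(0)
query = blob(90) + 0.05 * rng.standard_normal((64, 64))
print(classify_pose(query, templates))  # 90
```

The blob has no edges or texture in the usual sense, yet its shading produces a dense gradient field that is enough to tell the viewpoints apart; averaging before differentiating also suppresses most of the added pixel noise.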

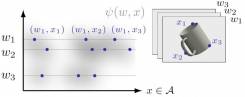

We also proposed extensions of this generative model for continuous pose estimation, which explicitly interpolate appearance between trained viewpoints. This makes it one of the few methods capable of appearance-based continuous pose estimation at the category level, a capability usually reserved for methods based on 3D CAD models of objects, which are limited to specific object instances.
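
A toy version of continuous pose estimation by interpolating between trained views might look as follows. Purely for illustration, it assumes that the feature points of neighbouring trained views are in one-to-one correspondence, so that the appearance at an intermediate angle can be obtained by linearly interpolating their positions; the pose is then the angle on a fine grid whose interpolated model gives the highest kernel-density likelihood. The function names, the bandwidth, and the synthetic rotating point cloud are hypothetical, not the interpolation scheme of the published method.

```python
import numpy as np


def kde_loglik(observed, model_pts, sigma=0.05):
    """Mean log-likelihood of observed 2D points under an isotropic Gaussian KDE."""
    d2 = ((observed[:, None, :] - model_pts[None, :, :]) ** 2).sum(axis=-1)
    dens = np.exp(-d2 / (2 * sigma ** 2)).mean(axis=1) / (2 * np.pi * sigma ** 2)
    return float(np.mean(np.log(dens + 1e-300)))


def interpolated_model(views, angle):
    """Appearance at an arbitrary angle, interpolated from the two enclosing trained views.

    views maps trained angle -> (N, 2) feature array; rows are assumed to be in
    one-to-one correspondence across views (a simplification for this sketch).
    """
    angles = sorted(views)
    angle = float(np.clip(angle, angles[0], angles[-1]))
    hi = next(i for i, a in enumerate(angles) if a >= angle)
    lo = max(hi - 1, 0)
    a0, a1 = angles[lo], angles[hi]
    t = 0.0 if a1 == a0 else (angle - a0) / (a1 - a0)
    return (1 - t) * views[a0] + t * views[a1]


def estimate_pose(observed, views, step=0.5):
    """Continuous pose: the grid angle whose interpolated model best explains the data."""
    angles = sorted(views)
    grid = np.arange(angles[0], angles[-1] + step / 2, step)
    scores = [kde_loglik(observed, interpolated_model(views, a)) for a in grid]
    return float(grid[int(np.argmax(scores))])


# Synthetic object: random 3D points, orthographically projected while rotating
# about the vertical axis (hypothetical data)
rng = np.random.default_rng(1)
pts3d = rng.uniform(-1.0, 1.0, size=(30, 3))


def project(deg):
    a = np.deg2rad(deg)
    x = pts3d[:, 0] * np.cos(a) + pts3d[:, 2] * np.sin(a)
    return np.stack([x, pts3d[:, 1]], axis=1)


views = {a: project(a) for a in (0, 30, 60, 90)}  # trained viewpoints only
estimate = estimate_pose(project(40), views)      # test view between 30 and 60 degrees
print(estimate)
```

No view at 40° was ever trained, yet the estimate falls between the 30° and 60° training views, close to 40°. Because linear interpolation of projected positions only approximates the true projection, the estimate lands near, not necessarily exactly on, the true angle.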

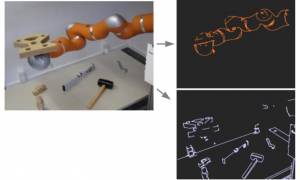

We found an interesting application of these models and methods in the recognition, pose estimation, and segmentation of a robotic arm in 2D images (Teney et al. 2013b). This task is very challenging because of the smooth, untextured appearance of the robot arm considered (a Kuka LWR). Moreover, the arm consists of articulated links that are identical in shape and appearance. The recognition method provides candidate detections of those links in the image, and the known physical (kinematic) constraints between the articulated links are enforced by probabilistic inference. As in traditional articulated models, those constraints are modeled as a Markov random field, and an algorithm based on belief propagation then identifies a globally consistent configuration of all links.
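
For a chain of links with a discrete set of candidate detections per link, the inference step can be sketched as max-product belief propagation in the log domain; on a chain this is exact and reduces to a forward pass followed by backtracking. The pairwise term below (the end joint of one link should coincide with the start joint of the next), the base-link prior, and the candidate coordinates are hypothetical simplifications of the kinematic constraints described above, not the model of Teney et al. 2013b.

```python
import numpy as np


def chain_map(unary, pairwise):
    """Max-product belief propagation, in the log domain, on a chain MRF.

    unary:    list of L arrays; unary[i][k] = log-score of candidate k for link i
    pairwise: list of L-1 arrays; pairwise[i][k, m] = log-compatibility between
              candidate k for link i and candidate m for link i+1
    Returns the index of the jointly most probable candidate for every link.
    """
    msg = np.asarray(unary[0], dtype=float)  # forward message, including link 0's unary
    backptr = []
    for i in range(1, len(unary)):
        scores = msg[:, None] + pairwise[i - 1]  # (K_{i-1}, K_i)
        backptr.append(np.argmax(scores, axis=0))
        msg = scores.max(axis=0) + unary[i]
    best = [int(np.argmax(msg))]
    for bp in reversed(backptr):  # backtrack the maximising choices
        best.append(int(bp[best[-1]]))
    return best[::-1]


def kinematic_compat(cands_a, cands_b, s=5.0):
    """Hypothetical constraint: link a's end joint should meet link b's start joint."""
    ends = np.array([c[1] for c in cands_a], dtype=float)
    starts = np.array([c[0] for c in cands_b], dtype=float)
    d2 = ((ends[:, None, :] - starts[None, :, :]) ** 2).sum(axis=-1)
    return -d2 / (2 * s ** 2)


# Candidate detections, each ((start x, start y), (end x, end y)) in pixels (synthetic)
links = [
    [((0, 0), (0, 40)), ((60, 60), (60, 100))],
    [((60, 100), (60, 140)), ((0, 40), (30, 70)), ((200, 0), (200, 40))],
    [((30, 70), (70, 70)), ((60, 140), (100, 140))],
]
# Identical links look alike, so detection scores are equal, apart from a mild
# (hypothetical) preference for the base-link candidate at the known base (0, 0)
unary = [np.array([-1.0, -3.0]), np.full(3, -1.0), np.full(2, -1.0)]
pairwise = [kinematic_compat(links[i], links[i + 1]) for i in range(len(links) - 1)]
print(chain_map(unary, pairwise))  # [0, 1, 0]
```

The detection scores alone cannot tell the identical-looking candidates for links 2 and 3 apart; the kinematic terms, anchored by the small preference on the base link, select the consistent configuration [0, 1, 0].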