Probabilistic Models for 3D Pose Estimation and Recognition

Motivated by autonomous robots that need to acquire object models and manipulation skills on the fly, we develop learnable object models that represent objects as Markov networks, in which nodes represent feature types and arcs represent spatial relations between them. These models can handle deformations, occlusion and clutter. Object detection, recognition and pose estimation are solved using classical methods of probabilistic inference.

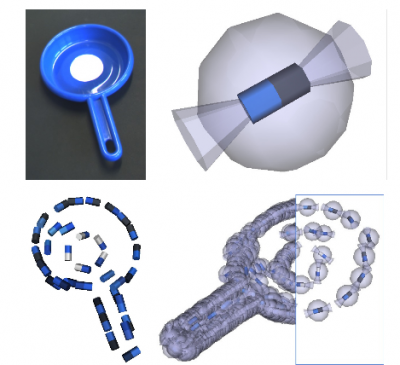

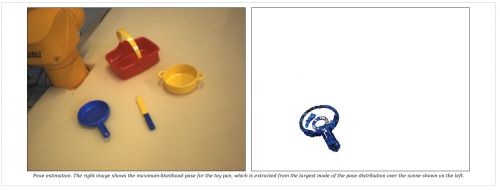

Our visual model is a generative object representation that allows for 6DOF pose estimation in stereo views of cluttered scenes (Detry 2009). We model the geometry and appearance of object edges. Our model encodes the spatial distribution of short edge segments with one or more density functions defined on the space of three-degree-of-freedom (3DOF) positions and 2DOF orientations. Evidence for these short segments is extracted from stereo imagery with a sparse-stereo edge reconstruction method (Krüger et al. 2004), and this evidence is turned into edge densities through kernel methods.
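The idea of an edge density built with kernel methods can be illustrated with a small estimator. This is a minimal sketch under our own assumptions, not the implementation of (Detry 2009): each observed edge segment is a position in 3D plus a unit direction (2DOF), positions get an isotropic Gaussian kernel, and directions get an equal mixture of von Mises-Fisher kernels centered on n_i and -n_i, so that an edge and its reversal are treated alike. The names `EdgeDensity`, `gaussian_kernel` and `axial_vmf_kernel`, and the bandwidth values `sigma` and `kappa`, are hypothetical.

```python
import numpy as np


def gaussian_kernel(p, centers, sigma):
    """Isotropic 3D Gaussian density N(p; c_i, sigma^2 I) for every center c_i; shape (N,)."""
    sq = np.sum((centers - p) ** 2, axis=1)
    return np.exp(-sq / (2.0 * sigma ** 2)) / (2.0 * np.pi * sigma ** 2) ** 1.5


def axial_vmf_kernel(n, axes, kappa):
    """Equal mixture of von Mises-Fisher densities at +n_i and -n_i on the unit sphere.

    Closed form: kappa / (4 pi sinh kappa) * cosh(kappa * t) with t = n . n_i,
    rewritten with exp(-kappa) factored out so that large kappa does not overflow.
    """
    t = axes @ n
    num = np.exp(kappa * (t - 1.0)) + np.exp(-kappa * (t + 1.0))
    return kappa * num / (4.0 * np.pi * (1.0 - np.exp(-2.0 * kappa)))


class EdgeDensity:
    """Kernel density estimate over edge segments (3D position, 2DOF direction)."""

    def __init__(self, positions, directions, sigma=0.005, kappa=20.0):
        self.p = np.asarray(positions, dtype=float)
        d = np.asarray(directions, dtype=float)
        self.n = d / np.linalg.norm(d, axis=1, keepdims=True)  # unit directions
        self.sigma = sigma  # position bandwidth (hypothetical value, scene units)
        self.kappa = kappa  # orientation concentration (hypothetical value)

    def __call__(self, position, direction):
        """Density of an edge with the given position and direction (sign ignored)."""
        n = np.asarray(direction, dtype=float)
        n = n / np.linalg.norm(n)
        k = gaussian_kernel(np.asarray(position, dtype=float), self.p, self.sigma)
        k = k * axial_vmf_kernel(n, self.n, self.kappa)
        return float(k.mean())  # equal weight 1/N per observed segment
```

Both kernel factors integrate to one (over 3D space and over the sphere, respectively), so the average is a proper density on positions times directions. For instance, `EdgeDensity(points, directions)(x, d)` returns a larger value for an edge at `x` aligned with nearby observed segments than for one perpendicular to them, and the same value for `d` and `-d`.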

Object appearance, mainly color, is modeled by searching for clusters in the object appearance domain and then defining a separate edge distribution for each color cluster. The object model effectively consists of a Markov network containing one latent variable that represents the object pose and a set of latent variables for the edge densities described above. Edge densities readily allow one to compute the 6DOF pose distribution of an object within an arbitrary scene by propagating scene evidence through the model. Pose inference is implemented with generic probability and machine learning techniques: belief propagation, Monte Carlo integration, and kernel density estimation.
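To make the pose-inference step more concrete, the sketch below scores 6DOF pose hypotheses against a scene edge density. It is deliberately simpler than the belief-propagation scheme described above and is not the authors' method: instead of propagating messages through the Markov network, it places the model's edges at each candidate pose (R, t), evaluates an unnormalized kernel density of the scene edges at the transformed edges, and averages the result, optionally over a random subset of model edges as a Monte Carlo estimate. The kernel choice, bandwidths, the toy object and all function names are our own assumptions.

```python
import numpy as np


def random_rotation(rng):
    """Uniformly distributed 3x3 rotation matrix built from a random unit quaternion."""
    q = rng.normal(size=4)
    w, x, y, z = q / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])


def scene_density(q_pos, q_dir, s_pos, s_dir, sigma, kappa):
    """Unnormalized KDE of scene edges, evaluated at each query edge; returns shape (M,).

    Position kernel: isotropic Gaussian. Orientation kernel: exp(kappa * ((n . n_i)^2 - 1)),
    which depends only on the squared cosine, so an edge and its reversal score the same.
    """
    d2 = np.sum((q_pos[:, None, :] - s_pos[None, :, :]) ** 2, axis=-1)  # (M, N) squared distances
    cos = q_dir @ s_dir.T                                                # (M, N) direction cosines
    k = np.exp(-d2 / (2.0 * sigma ** 2)) * np.exp(kappa * (cos ** 2 - 1.0))
    return k.mean(axis=1)


def score_pose(R, t, model_pos, model_dir, scene_pos, scene_dir,
               sigma=0.01, kappa=10.0, n_samples=None, rng=None):
    """Average scene density at the model edges placed at pose (R, t).

    With n_samples set, model edges are drawn at random with replacement, giving a
    Monte Carlo estimate of the same average.
    """
    if n_samples is not None:
        if rng is None:
            rng = np.random.default_rng()
        idx = rng.integers(0, len(model_pos), size=n_samples)
        model_pos, model_dir = model_pos[idx], model_dir[idx]
    q_pos = model_pos @ R.T + t  # rotate, then translate, every model edge position
    q_dir = model_dir @ R.T      # directions are only rotated
    return float(scene_density(q_pos, q_dir, scene_pos, scene_dir, sigma, kappa).mean())


def best_pose(candidates, model_pos, model_dir, scene_pos, scene_dir, **kwargs):
    """Score every (R, t) hypothesis; return the index of the best one and all scores."""
    scores = [score_pose(R, t, model_pos, model_dir, scene_pos, scene_dir, **kwargs)
              for R, t in candidates]
    return int(np.argmax(scores)), scores


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical toy object: three orthogonal edge "legs" of different lengths.
    xs, ys, zs = np.linspace(0, 0.10, 11), np.linspace(0.01, 0.05, 5), np.linspace(0.01, 0.03, 3)
    model_pos = np.vstack([np.outer(xs, [1, 0, 0]), np.outer(ys, [0, 1, 0]), np.outer(zs, [0, 0, 1])])
    model_dir = np.repeat(np.eye(3), [11, 5, 3], axis=0)
    # Synthetic scene: the same object observed at an unknown pose.
    R_true, t_true = random_rotation(rng), np.array([0.3, -0.1, 0.5])
    scene_pos, scene_dir = model_pos @ R_true.T + t_true, model_dir @ R_true.T
    candidates = [(R_true, t_true)] + [
        (random_rotation(rng), t_true + rng.normal(scale=0.02, size=3)) for _ in range(50)]
    best, scores = best_pose(candidates, model_pos, model_dir, scene_pos, scene_dir)
    print("best hypothesis:", best, "score:", scores[best])
```

Blind sampling of rotations, as in `random_rotation`, only scales to small search spaces; in the approach described above, pose hypotheses are instead informed by propagating scene evidence through the model.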

Acknowledgments

This work is supported by the Belgian National Fund for Scientific Research (FNRS) and the EU Cognitive Systems project PACO-PLUS (IST-FP6-IP-027657).